Snapshot protection for PSO using Ansible

A Pure Storage customer who has deployed Kubernetes in two datacenters asked me if we support replication in the Pure Service Orchestrator (PSO) directly from Kubernetes.

The short answer is "no": currently you cannot set up replication directly from within Kubernetes. While I believe this is on the roadmap, I told the customer that they could use a simple Ansible playbook to achieve this if they wanted to.

TLDR? You can get the playbook here:

https://github.com/dnix101/purestorage/blob/master/ansible/pso_async_replication.yaml

Obviously, such a statement raises the next question: do you have an example? So I thought I'd create one and share it with anyone interested.

Prepare your FlashArray

The one thing this script requires is that you set up a protection group on your FlashArray, so start there unless you already have a protection group in place.

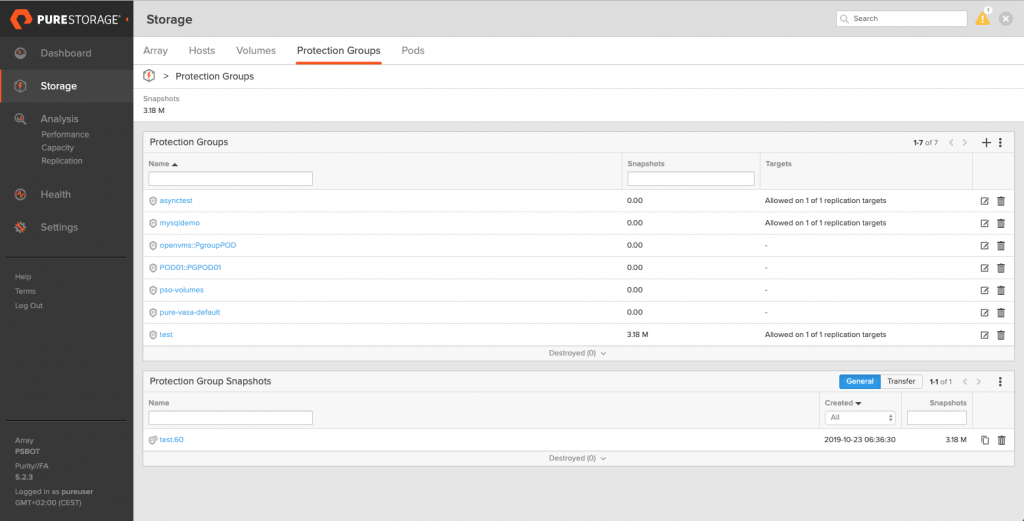

Log in to the array, go to Storage and select the Protection Groups tab.

Click the + icon, give the protection group a name and click Create.

Next (this step is optional), if you have multiple arrays and want to replicate the snapshots to another array, click the three dots under Targets and select Add…

Select the FlashArray you wish to replicate to, as shown below, and click Add.

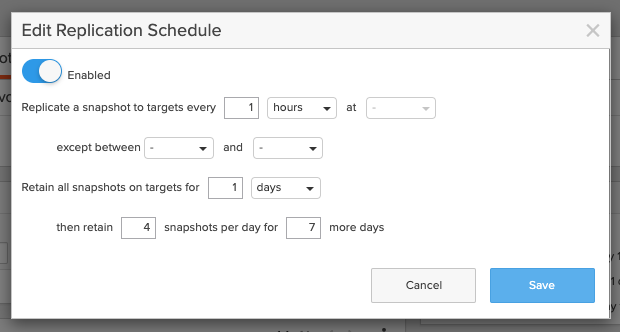

Now set a snapshot schedule by clicking the edit icon next to Snapshot Schedule. Set the schedule that suits your needs and click Save.

Do the same for the replication schedule if you want to use replication. If not, just leave it disabled and you're fine.

Over to the ansible-playbook

So now let’s jump into the Ansible magic. In the first part of the playbook I define some variables as shown below.

```yaml
- name: Add PSO volumes to a protection group
  hosts: localhost
  gather_facts: no
  vars:
    # IP address or URL of the source Cloud Block Storage/FlashArray
    fa_url: 10.10.1.1
    # API token used for the source Cloud Block Storage/FlashArray
    fa_api_token: xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx
    # The namespace specified for PSO in the values.yaml file for
    # the backend storage namespace
    namespace:
      pure: psodemo
    # The protection group to add the volumes to
    protectiongroup: pso-volumes
    # Create (and replicate) a snapshot when volumes are added
    create_snap: true
```

To use the playbook, you need to enter the IP address (fa_url) and the API token (fa_api_token) of the FlashArray you want to work with. Then you specify the namespace.pure parameter, which should be the same value you used in your values.yaml file when installing PSO. All volumes PSO creates on the FlashArray are prefixed with this value (e.g. when using psodemo as in the example, volumes are created as psodemo-pvc-xxx). Finally, you can specify whether you want to create and replicate a snapshot directly after a volume is added to the protection group (create_snap). This is optional, and it is set up so that a snapshot is not created every time the playbook runs, only when a volume has been added to the protection group.
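Since everything in the playbook hinges on this naming convention, here is a minimal Python sketch (not part of the playbook, and it does not talk to an array) that maps a Kubernetes PersistentVolume name to the FlashArray volume name and back. It assumes PSO simply joins the namespace.pure prefix and the PV name with a hyphen, as the psodemo-pvc-xxx example suggests; the function names and the sample PV name are hypothetical.

```python
from typing import Optional


def fa_volume_name(prefix: str, pv_name: str) -> str:
    """Return the FlashArray volume name PSO would use for a Kubernetes PV.

    Assumption: the name is "<namespace.pure>-<PV name>", matching the
    psodemo-pvc-xxx example; check this against your own array.
    """
    return f"{prefix}-{pv_name}"


def pv_name_from_volume(prefix: str, volume: str) -> Optional[str]:
    """Reverse mapping: strip "<prefix>-", or return None for non-PSO volumes."""
    head = f"{prefix}-"
    if volume.startswith(head):
        return volume[len(head):]
    return None


if __name__ == "__main__":
    name = fa_volume_name("psodemo", "pvc-1234abcd")  # hypothetical PV name
    print(name)                                        # psodemo-pvc-1234abcd
    print(pv_name_from_volume("psodemo", name))        # pvc-1234abcd
    print(pv_name_from_volume("psodemo", "oracle-data01"))  # None
```

The reverse mapping is handy when you look at the members of the protection group on the array and want to work out which Kubernetes volume each one belongs to.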

```yaml
  tasks:
    - name: Get list of volumes on the array
      purefa_info:
        gather_subset: volumes
        fa_url: "{{ fa_url }}"
        api_token: "{{ fa_api_token }}"
      register: volumes
```

The first actual task in the playbook fetches the details of all volumes on the FlashArray and stores the JSON output in a variable called volumes.
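The next task only needs the volume names, which purefa_info returns as the keys of a dictionary. The Python sketch below mimics that extraction on a made-up, trimmed sample of the registered variable. Only the nesting purefa_info → volumes → volume name is taken from the playbook's own json_query; the volume names and the per-volume size field are illustrative placeholders, not the module's exact output.

```python
from typing import Any, Dict, List

# Hypothetical, trimmed example of what "register: volumes" could hold after
# the purefa_info task. The per-volume fields are placeholders.
SAMPLE_RESULT: Dict[str, Any] = {
    "changed": False,
    "purefa_info": {
        "volumes": {
            "psodemo-pvc-0a1b": {"size": 10737418240},
            "psodemo-pvc-2c3d": {"size": 5368709120},
            "oracle-data01": {"size": 107374182400},
        }
    },
}


def volume_names(result: Dict[str, Any]) -> List[str]:
    """Python equivalent of json_query('purefa_info.volumes.keys(@)')."""
    return list(result["purefa_info"]["volumes"].keys())


if __name__ == "__main__":
    # ['psodemo-pvc-0a1b', 'psodemo-pvc-2c3d', 'oracle-data01']
    print(volume_names(SAMPLE_RESULT))
```

Note that the list still contains every volume on the array, including non-PSO ones such as oracle-data01; filtering happens in the next task.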

```yaml
    - name: Add volumes to protection group
      purefa_pg:
        pgroup: "{{ protectiongroup }}"
        volume: "{{ item }}"
        fa_url: "{{ fa_url }}"
        api_token: "{{ fa_api_token }}"
      when: item is match(namespace.pure)
      loop: "{{ volumes | json_query('purefa_info.volumes.keys(@)') }}"
      register: volumes_added
```

The next task goes through the list of volumes using a loop over the volumes variable, with a json_query that returns the volume names (they are returned as the keys of the volumes dictionary). I also added a when clause so that only volumes whose names start with the string specified in the namespace.pure variable are included. The task calls the purefa_pg module to add these volumes to the protection group. Because the module is idempotent, it only actually adds a volume if it wasn't in the protection group to start with.

The result of the purefa_pg task is stored in the variable volumes_added in the last line.
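To illustrate how the loop, the when clause and the registered result interact, here is a small Python simulation. It is a sketch, not the purefa_pg module: the protection group is modelled as a plain set, `re.match` stands in for Ansible's `match` test (which matches at the start of the string), and the overall changed flag is True if any iteration changed, which is how a registered loop result reports it. The function name and sample volumes are hypothetical.

```python
import re
from typing import Dict, List, Set


def add_to_pgroup(pgroup: Set[str], volumes: List[str], prefix: str) -> Dict[str, object]:
    """Simulate the 'Add volumes to protection group' loop.

    - re.match mirrors Ansible's `match` test: anchored at the start of the
      string, with the prefix treated as a regular expression.
    - Adding is idempotent: a volume already in the group is not a change.
    - Like a registered loop result, `changed` is True if any item changed.
    """
    results: List[Dict[str, object]] = []
    for item in volumes:
        if not re.match(prefix, item):
            # when: evaluated to false, so this iteration is skipped
            results.append({"item": item, "skipped": True, "changed": False})
            continue
        changed = item not in pgroup
        pgroup.add(item)
        results.append({"item": item, "skipped": False, "changed": changed})
    return {"changed": any(r["changed"] for r in results), "results": results}


if __name__ == "__main__":
    pgroup = {"psodemo-pvc-0a1b"}  # already protected
    names = ["psodemo-pvc-0a1b", "psodemo-pvc-2c3d", "oracle-data01"]

    first = add_to_pgroup(pgroup, names, "psodemo")
    print(first["changed"], sorted(pgroup))
    # True ['psodemo-pvc-0a1b', 'psodemo-pvc-2c3d']

    second = add_to_pgroup(pgroup, names, "psodemo")
    print(second["changed"])  # False: nothing new to add
```

Because the prefix is used as a pattern anchored only at the start, psodemo would also match a volume from another PSO install named, say, psodemo2-pvc-xxx. If that can happen in your environment, a stricter pattern such as `namespace.pure ~ '-pvc-'` in the when clause is worth considering.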

```yaml
    # The following task is optional and creates a snapshot of the
    # protection group. Because of the when clause, a snapshot is only
    # created when the previous task added at least one volume, and
    # since we use apply_retention the snapshot follows the
    # retention policy of the pg.
    - name: Create a snapshot and replicate changes
      purefa_pgsnap:
        name: "{{ protectiongroup }}"
        remote: yes
        apply_retention: yes
        fa_url: "{{ fa_url }}"
        api_token: "{{ fa_api_token }}"
      when: create_snap and volumes_added.changed
```

The third and final task creates a snapshot using the purefa_pgsnap module. With a when clause I specify that this snapshot is only created if the create_snap variable is true AND volumes_added.changed (the output of the purefa_pg task) is true. This way the task only runs if a volume was actually added to the protection group.
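The when condition is a plain boolean AND, which the Python sketch below mirrors. The `to_bool` helper is a hypothetical, simplified approximation of Ansible's `bool` filter, not its actual implementation. It is included because a value passed on the command line as `-e create_snap=false` arrives as the string "false", and a non-empty string is truthy on its own; how strictly your Ansible version treats such conditionals may differ.

```python
def to_bool(value) -> bool:
    """Rough, hypothetical stand-in for Ansible's `bool` filter."""
    if isinstance(value, bool):
        return value
    return str(value).strip().lower() in ("yes", "on", "1", "true")


def should_snapshot(create_snap, volumes_added_changed: bool) -> bool:
    """Mirror of `when: create_snap | bool and volumes_added.changed`."""
    return to_bool(create_snap) and volumes_added_changed


if __name__ == "__main__":
    print(should_snapshot(True, True))      # True: volume added, snapshots on
    print(should_snapshot(True, False))     # False: nothing was added
    print(should_snapshot(False, True))     # False: snapshots switched off
    print(should_snapshot("false", True))   # False once converted with to_bool
    print(bool("false"))                    # True: why the raw string is risky
```

If you expect to override create_snap from the command line, writing the condition as `when: create_snap | bool and volumes_added.changed` is the safer choice.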

Please note: in this last task I specify the parameters remote: yes and apply_retention: yes, which are only available from Ansible 2.9 onwards. If you leave these out, the playbook also works with Ansible 2.8; however, the snapshot will not be replicated and no retention will be applied to it, meaning you'd have to delete these snapshots manually or with a script.

Using the script for continuous backup

The script detects volumes that are not yet in the protection group and adds only those (new) volumes. The snapshot and replication are only triggered if new volumes were added to the protection group. This means you could schedule the script to run, for example, every hour of every day to automatically build a backup strategy for your containers with persistent storage.
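To show what scheduled runs would look like over time, here is a compact Python simulation of several hourly runs. It is a sketch under the same assumptions as before (a set stands in for the protection group, `re.match` for the `match` test); `run_playbook` and the hourly volume lists are hypothetical. The playbook only triggers an extra snapshot in the runs where a new PVC appeared; the regular snapshots still come from the schedule you set on the protection group.

```python
import re
from typing import List, Set, Tuple


def run_playbook(pgroup: Set[str], array_volumes: List[str], prefix: str,
                 create_snap: bool = True) -> Tuple[bool, bool]:
    """One simulated playbook run: returns (volumes_added_changed, snapshot_taken)."""
    new = [v for v in array_volumes if re.match(prefix, v) and v not in pgroup]
    pgroup.update(new)
    changed = bool(new)
    return changed, create_snap and changed


if __name__ == "__main__":
    pgroup: Set[str] = set()
    # Hypothetical volumes present on the array at each hourly run.
    hourly_volumes = [
        ["psodemo-pvc-0a1b"],                      # run 1: first PVC
        ["psodemo-pvc-0a1b"],                      # run 2: nothing new
        ["psodemo-pvc-0a1b", "psodemo-pvc-2c3d"],  # run 3: second PVC
        ["psodemo-pvc-0a1b", "psodemo-pvc-2c3d"],  # run 4: nothing new
    ]
    snapshots = 0
    for run, volumes in enumerate(hourly_volumes, start=1):
        changed, snap = run_playbook(pgroup, volumes, "psodemo")
        snapshots += snap
        print(f"run {run}: changed={changed} snapshot={snap}")
    print("snapshots triggered by the playbook:", snapshots)  # 2
```

On a Linux control node, one common way to get the hourly runs is a cron entry along the lines of `0 * * * * ansible-playbook /path/to/pso_async_replication.yaml` (the path is an assumption; adjust it to where you keep the playbook).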